前言

本文记录在FoundationPose中,跑通基于CAD模型为输入的demo,输出位姿信息,可视化结果。

然后分享NeRF物体重建部分的训练,以及RGBD图为输入的demo。

1、搭建环境

方案1:基于docker镜像(推荐)

首先下载开源代码:https://github.com/NVlabs/FoundationPose

然后执行下面命令,拉取镜像,并构建镜像环境

cd docker/

docker pull wenbowen123/foundationpose && docker tag wenbowen123/foundationpose foundationpose

bash docker/run_container.sh

bash build_all.sh构建完成后,可以用docker exec 进入镜像容器中。

方案2:基于Conda(比较麻烦)

首先安装 Eigen3

cd $HOME && wget -q https://gitlab.com/libeigen/eigen/-/archive/3.4.0/eigen-3.4.0.tar.gz && \

tar -xzf eigen-3.4.0.tar.gz && \

cd eigen-3.4.0 && mkdir build && cd build

cmake .. -Wno-dev -DCMAKE_BUILD_TYPE=Release -DCMAKE_CXX_FLAGS=-std=c++14 ..

sudo make install

cd $HOME && rm -rf eigen-3.4.0 eigen-3.4.0.tar.gz然后参考下面命令,创建conda环境

# create conda environment

create -n foundationpose python=3.9

# activate conda environment

conda activate foundationpose

# install dependencies

python -m pip install -r requirements.txt

# Install NVDiffRast

python -m pip install --quiet --no-cache-dir git+https://github.com/NVlabs/nvdiffrast.git

# Kaolin (Optional, needed if running model-free setup)

python -m pip install --quiet --no-cache-dir kaolin==0.15.0 -f https://nvidia-kaolin.s3.us-east-2.amazonaws.com/torch-2.0.0_cu118.html

# PyTorch3D

python -m pip install --quiet --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/py39_cu118_pyt200/download.html

# Build extensions

CMAKE_PREFIX_PATH=$CONDA_PREFIX/lib/python3.9/site-packages/pybind11/share/cmake/pybind11 bash build_all_conda.sh2、基于CAD模型为输入的demo

首先,下载模型权重,里面包括两个文件夹;点击下载(模型权重)

在工程目录中创建weights/,将下面两个文件夹放到里面。

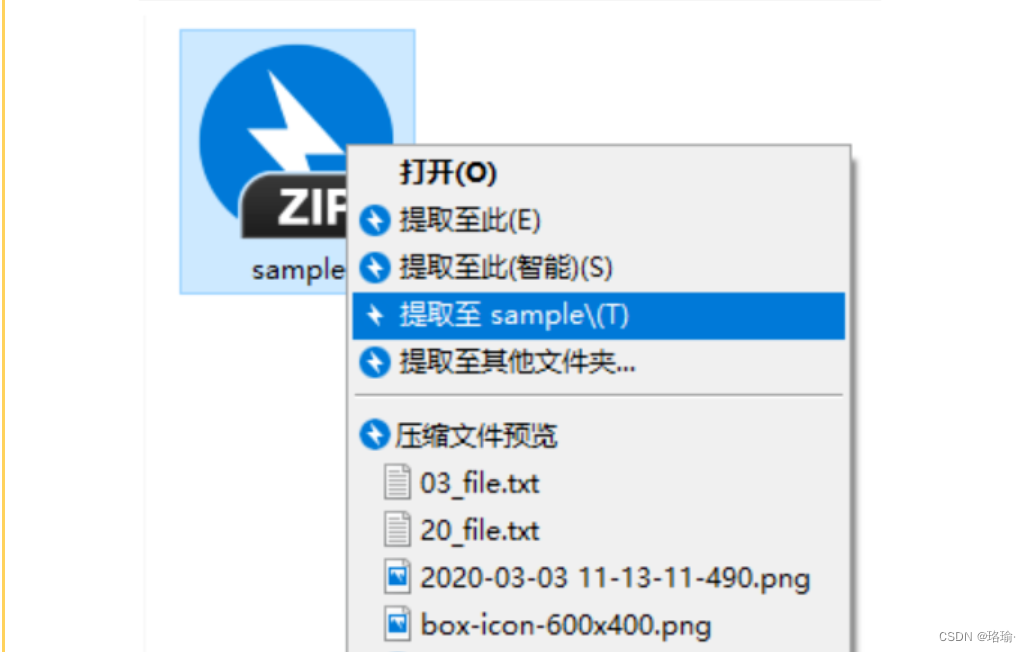

然后,下载测试数据,里面包括两个压缩文件;点击下载(demo数据)

在工程目录中创建demo_data/,加压文件,将下面两个文件放到里面。

运行 run_demo.py,实现CAD模型为输入的demo

python run_demo.py --debug 2如果是服务器运行,没有可视化的,需要注释两行代码:

if debug>=1:

center_pose = pose@np.linalg.inv(to_origin)

vis = draw_posed_3d_box(reader.K, img=color, ob_in_cam=center_pose, bbox=bbox)

vis = draw_xyz_axis(color, ob_in_cam=center_pose, scale=0.1, K=reader.K, thickness=3, transparency=0, is_input_rgb=True)

# cv2.imshow('1', vis[...,::-1])

# cv2.waitKey(1)

然后看到demo_data/mustard0/,里面生成了ob_in_cam、track_vis文件夹

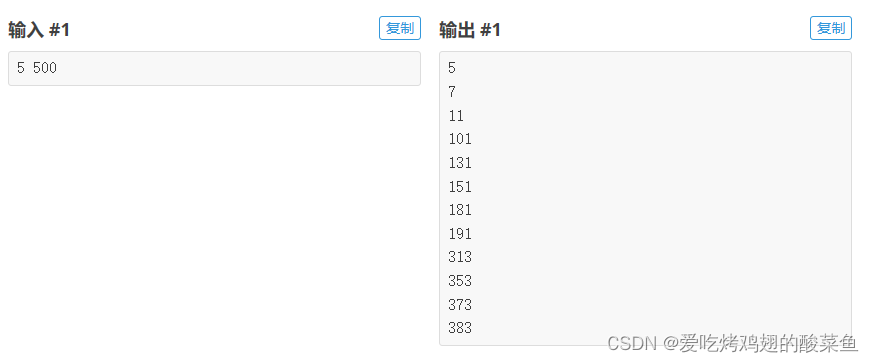

ob_in_cam 是位姿估计的结果,用txt文件存储,示例文件:

6.073544621467590332e-01 -2.560715079307556152e-01 7.520291209220886230e-01 -4.481770694255828857e-01

-7.755840420722961426e-01 -3.960975110530853271e-01 4.915038347244262695e-01 1.187708452343940735e-01

1.720167100429534912e-01 -8.817789554595947266e-01 -4.391765296459197998e-01 8.016449213027954102e-01

0.000000000000000000e+00 0.000000000000000000e+00 0.000000000000000000e+00 1.000000000000000000e+00

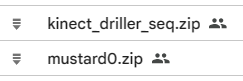

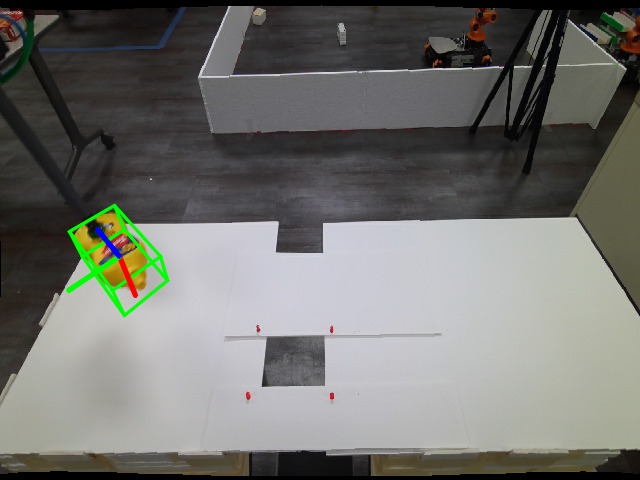

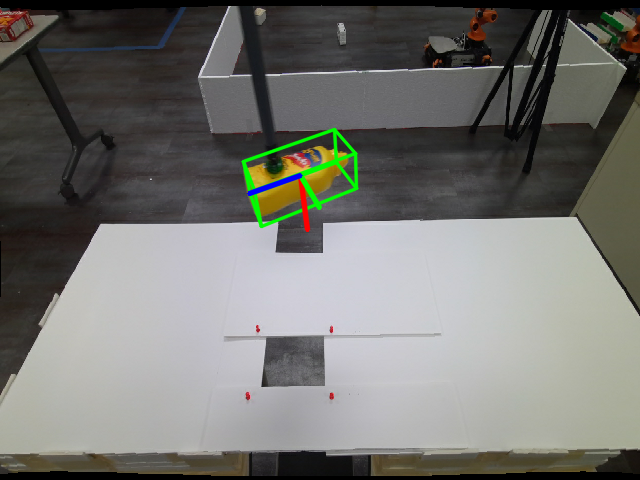

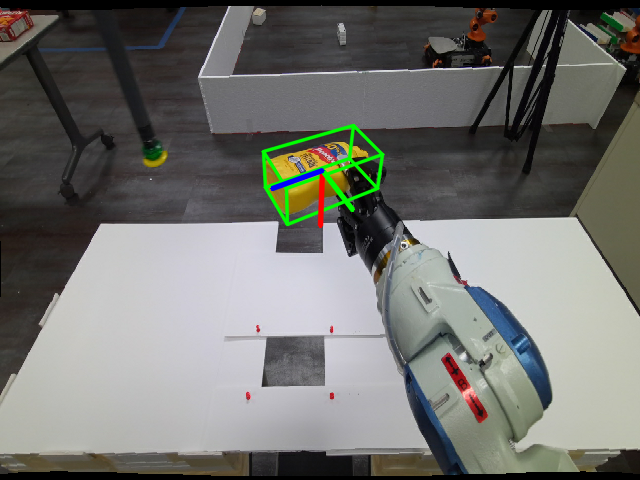

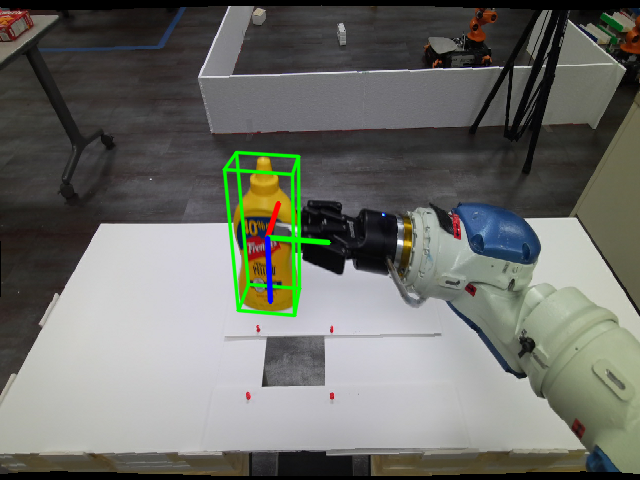

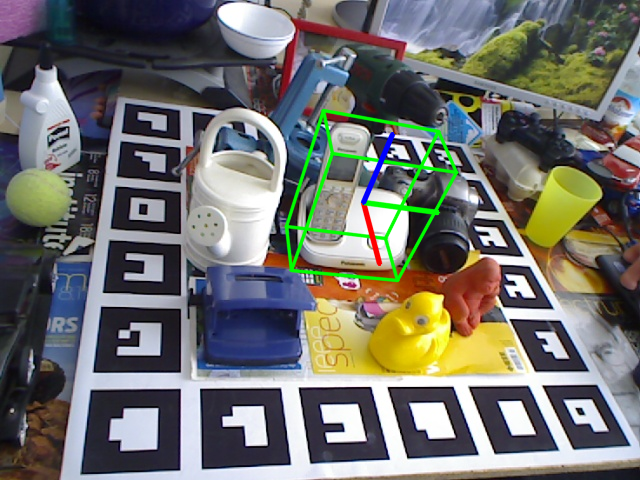

track_vis 是可视化结果,能看到多张图片:

3、NeRF物体重建训练

下载训练数据,Linemod和YCB-V两个公开数据集的示例:

点击下载(RGBD参考数据)

示例1:训练Linemod数据集

修改代码bundlesdf/run_nerf.py,修改为use_refined_mask=False,即98行:

mesh = run_one_ob(base_dir=base_dir, cfg=cfg, use_refined_mask=False)

然后执行命令:

python bundlesdf/run_nerf.py --ref_view_dir /DATASET/lm_ref_views --dataset linemod如果是服务器运行,没有可视化的,需要安装xvfb

sudo apt-get update

sudo apt-get install -y xvfb然后执行命令:

xvfb-run -s "-screen 0 1024x768x24" python bundlesdf/run_nerf.py --ref_view_dir model_free_ref_views/lm_ref_views --dataset linemod因为训练NeRF需要渲染的,使用xvfb进行模拟。

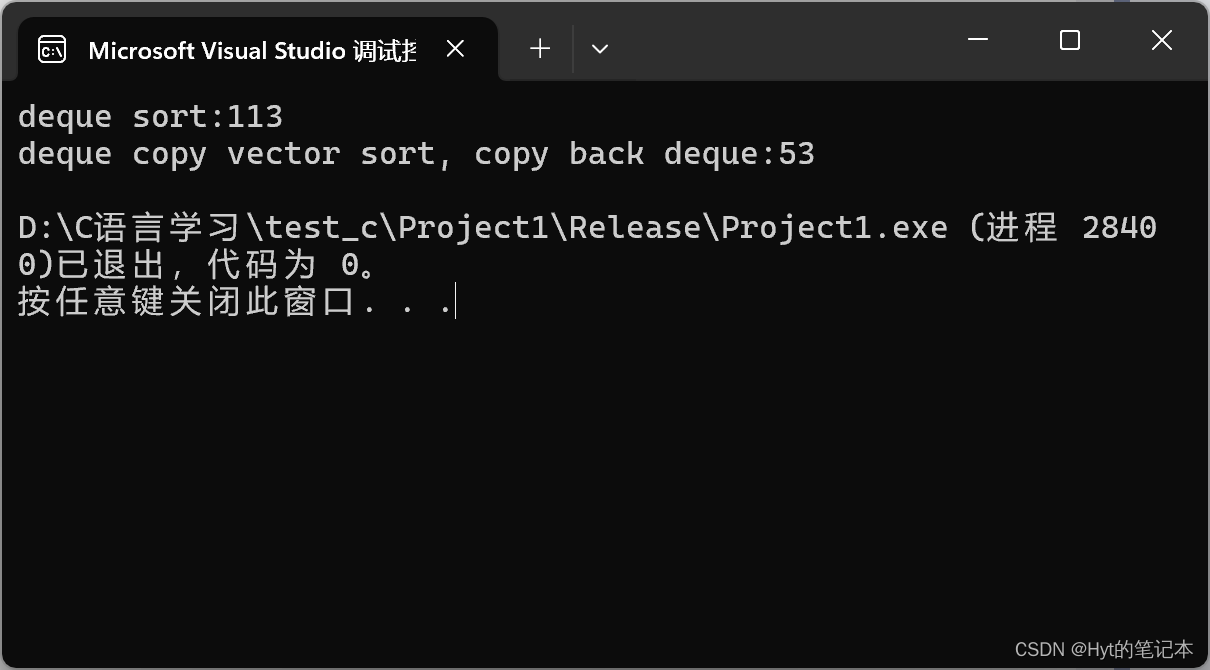

能看到打印信息:

bundlesdf/run_nerf.py:61: DeprecationWarning: Starting with ImageIO v3 the behavior of this function will switch to that of iio.v3.imread. To keep the current behavior (and make this warning disappear) use `import imageio.v2 as imageio` or call `imageio.v2.imread` directly.

rgb = imageio.imread(color_file)

[compute_scene_bounds()] compute_scene_bounds_worker start

[compute_scene_bounds()] compute_scene_bounds_worker done

[compute_scene_bounds()] merge pcd

[compute_scene_bounds()] compute_translation_scales done

translation_cvcam=[0.00024226 0.00356217 0.00056694], sc_factor=19.274929219577043

[build_octree()] Octree voxel dilate_radius:1

[__init__()] level:0, vox_pts:torch.Size([1, 3]), corner_pts:torch.Size([8, 3])

[__init__()] level:1, vox_pts:torch.Size([8, 3]), corner_pts:torch.Size([27, 3])

[__init__()] level:2, vox_pts:torch.Size([64, 3]), corner_pts:torch.Size([125, 3])

[draw()] level:2

[draw()] level:2

level 0, resolution: 32

level 1, resolution: 37

level 2, resolution: 43

level 3, resolution: 49

level 4, resolution: 56

level 5, resolution: 64

level 6, resolution: 74

level 7, resolution: 85

level 8, resolution: 98

level 9, resolution: 112

level 10, resolution: 128

level 11, resolution: 148

level 12, resolution: 169

level 13, resolution: 195

level 14, resolution: 223

level 15, resolution: 256

GridEncoder: input_dim=3 n_levels=16 level_dim=2 resolution=32 -> 256 per_level_scale=1.1487 params=(26463840, 2) gridtype=hash align_corners=False

sc_factor 19.274929219577043

translation [0.00024226 0.00356217 0.00056694]

[__init__()] denoise cloud

[__init__()] Denoising rays based on octree cloud

[__init__()] bad_mask#=3

rays torch.Size([128387, 12])

[train()] train progress 0/1001

[train_loop()] Iter: 0, valid_samples: 524161/524288, valid_rays: 2048/2048, loss: 309.0942383, rgb_loss: 0.0216732, rgb0_loss: 0.0000000, fs_rgb_loss: 0.0000000, depth_loss: 0.0000000, depth_loss0: 0.0000000, fs_loss: 301.6735840, point_cloud_loss: 0.0000000, point_cloud_normal_loss: 0.0000000, sdf_loss: 7.2143111, eikonal_loss: 0.0000000, variation_loss: 0.0000000, truncation(meter): 0.0100000, pose_reg: 0.0000000, reg_features: 0.1152707,

[train()] train progress 100/1001

[train()] train progress 200/1001

[train()] train progress 300/1001

[train()] train progress 400/1001

[train()] train progress 500/1001

Saved checkpoints at model_free_ref_views/lm_ref_views/ob_0000001/nerf/model_latest.pth

[train_loop()] Iter: 500, valid_samples: 518554/524288, valid_rays: 2026/2048, loss: 1.0530750, rgb_loss: 0.0009063, rgb0_loss: 0.0000000, fs_rgb_loss: 0.0000000, depth_loss: 0.0000000, depth_loss0: 0.0000000, fs_loss: 0.2142579, point_cloud_loss: 0.0000000, point_cloud_normal_loss: 0.0000000, sdf_loss: 0.8360301, eikonal_loss: 0.0000000, variation_loss: 0.0000000, truncation(meter): 0.0100000, pose_reg: 0.0000000, reg_features: 0.0008409,

[extract_mesh()] query_pts:torch.Size([42875, 3]), valid:42875

[extract_mesh()] Running Marching Cubes

[extract_mesh()] done V:(4536, 3), F:(8986, 3)

[train()] train progress 600/1001

[train()] train progress 700/1001

[train()] train progress 800/1001

[train()] train progress 900/1001

[train()] train progress 1000/1001

Saved checkpoints at model_free_ref_views/lm_ref_views/ob_0000001/nerf/model_latest.pth

[train_loop()] Iter: 1000, valid_samples: 519351/524288, valid_rays: 2029/2048, loss: 0.4827633, rgb_loss: 0.0006563, rgb0_loss: 0.0000000, fs_rgb_loss: 0.0000000, depth_loss: 0.0000000, depth_loss0: 0.0000000, fs_loss: 0.0935674, point_cloud_loss: 0.0000000, point_cloud_normal_loss: 0.0000000, sdf_loss: 0.3876466, eikonal_loss: 0.0000000, variation_loss: 0.0000000, truncation(meter): 0.0100000, pose_reg: 0.0000000, reg_features: 0.0001022,

[extract_mesh()] query_pts:torch.Size([42875, 3]), valid:42875

[extract_mesh()] Running Marching Cubes

[extract_mesh()] done V:(5265, 3), F:(10328, 3)

[extract_mesh()] query_pts:torch.Size([42875, 3]), valid:42875

[extract_mesh()] Running Marching Cubes

[extract_mesh()] done V:(5265, 3), F:(10328, 3)

[<module>()] OpenGL_accelerate module loaded

[<module>()] Using accelerated ArrayDatatype

[mesh_texture_from_train_images()] Texture: Texture map computation

project train_images 0/16

project train_images 1/16

project train_images 2/16

project train_images 3/16

project train_images 4/16

project train_images 5/16

project train_images 6/16

project train_images 7/16

project train_images 8/16

project train_images 9/16

project train_images 10/16

project train_images 11/16

project train_images 12/16

project train_images 13/16

project train_images 14/16

project train_images 15/16

重点留意,损失的变化:

[train()] train progress 0/1001

[train_loop()] Iter: 0, valid_samples: 524161/524288, valid_rays: 2048/2048, loss: 309.0942383, rgb_loss: 0.0216732, rgb0_loss: 0.0000000, fs_rgb_loss: 0.0000000, depth_loss: 0.0000000, depth_loss0: 0.0000000, fs_loss: 301.6735840, point_cloud_loss: 0.0000000, point_cloud_normal_loss: 0.0000000, sdf_loss: 7.2143111, eikonal_loss: 0.0000000, variation_loss: 0.0000000, truncation(meter): 0.0100000, pose_reg: 0.0000000, reg_features: 0.1152707,[train()] train progress 100/1001

[train()] train progress 200/1001

[train()] train progress 300/1001

[train()] train progress 400/1001

[train()] train progress 500/1001

Saved checkpoints at model_free_ref_views/lm_ref_views/ob_0000001/nerf/model_latest.pth

[train_loop()] Iter: 500, valid_samples: 518554/524288, valid_rays: 2026/2048, loss: 1.0530750, rgb_loss: 0.0009063, rgb0_loss: 0.0000000, fs_rgb_loss: 0.0000000, depth_loss: 0.0000000, depth_loss0: 0.0000000, fs_loss: 0.2142579, point_cloud_loss: 0.0000000, point_cloud_normal_loss: 0.0000000, sdf_loss: 0.8360301, eikonal_loss: 0.0000000, variation_loss: 0.0000000, truncation(meter): 0.0100000, pose_reg: 0.0000000, reg_features: 0.0008409,

默认训练1000轮,训练也挺快的。

在lm_ref_views/ob_0000001/中生成了nerf文件夹,存放下面文件:

在lm_ref_views/ob_0000001/model中,生成了的model.obj,后续模型推理或demo直接使用它。

示例2:训练YCB-V数据集

python bundlesdf/run_nerf.py --ref_view_dir /DATASET/ycbv/ref_views_16 --dataset ycbv如果是服务器运行,没有可视化的,需要安装xvfb

sudo apt-get update

sudo apt-get install -y xvfb然后执行命令:

xvfb-run -s "-screen 0 1024x768x24" python bundlesdf/run_nerf.py --ref_view_dir /DATASET/ycbv/ref_views_16 --dataset ycbv因为训练NeRF需要渲染的,使用xvfb进行模拟。

4、RGBD图输入demo

这里以Linemod数据集为示例,首先下面测试数据集

点击下载(测试数据)

然后加压文件,存放路径:FoundationPose-main/model_free_ref_views/lm_test_all

官方代码有问题,需要替换两个代码:datareader.py、run_linemod.py

run_linemod.py

# Copyright (c) 2023, NVIDIA CORPORATION. All rights reserved.

#

# NVIDIA CORPORATION and its licensors retain all intellectual property

# and proprietary rights in and to this software, related documentation

# and any modifications thereto. Any use, reproduction, disclosure or

# distribution of this software and related documentation without an express

# license agreement from NVIDIA CORPORATION is strictly prohibited.

from Utils import *

import json,uuid,joblib,os,sys

import scipy.spatial as spatial

from multiprocessing import Pool

import multiprocessing

from functools import partial

from itertools import repeat

import itertools

from datareader import *

from estimater import *

code_dir = os.path.dirname(os.path.realpath(__file__))

sys.path.append(f'{code_dir}/mycpp/build')

import yaml

import re

def get_mask(reader, i_frame, ob_id, detect_type):

if detect_type=='box':

mask = reader.get_mask(i_frame, ob_id)

H,W = mask.shape[:2]

vs,us = np.where(mask>0)

umin = us.min()

umax = us.max()

vmin = vs.min()

vmax = vs.max()

valid = np.zeros((H,W), dtype=bool)

valid[vmin:vmax,umin:umax] = 1

elif detect_type=='mask':

mask = reader.get_mask(i_frame, ob_id)

if mask is None:

return None

valid = mask>0

elif detect_type=='detected':

mask = cv2.imread(reader.color_files[i_frame].replace('rgb','mask_cosypose'), -1)

valid = mask==ob_id

else:

raise RuntimeError

return valid

def run_pose_estimation_worker(reader, i_frames, est:FoundationPose=None, debug=0, ob_id=None, device='cuda:0'):

torch.cuda.set_device(device)

est.to_device(device)

est.glctx = dr.RasterizeCudaContext(device=device)

result = NestDict()

for i, i_frame in enumerate(i_frames):

logging.info(f"{i}/{len(i_frames)}, i_frame:{i_frame}, ob_id:{ob_id}")

print("\n### ", f"{i}/{len(i_frames)}, i_frame:{i_frame}, ob_id:{ob_id}")

video_id = reader.get_video_id()

color = reader.get_color(i_frame)

depth = reader.get_depth(i_frame)

id_str = reader.id_strs[i_frame]

H,W = color.shape[:2]

debug_dir =est.debug_dir

ob_mask = get_mask(reader, i_frame, ob_id, detect_type=detect_type)

if ob_mask is None:

logging.info("ob_mask not found, skip")

result[video_id][id_str][ob_id] = np.eye(4)

return result

est.gt_pose = reader.get_gt_pose(i_frame, ob_id)

pose = est.register(K=reader.K, rgb=color, depth=depth, ob_mask=ob_mask, ob_id=ob_id)

logging.info(f"pose:\n{pose}")

if debug>=3:

m = est.mesh_ori.copy()

tmp = m.copy()

tmp.apply_transform(pose)

tmp.export(f'{debug_dir}/model_tf.obj')

result[video_id][id_str][ob_id] = pose

return result, pose

def run_pose_estimation():

wp.force_load(device='cuda')

reader_tmp = LinemodReader(opt.linemod_dir, split=None)

print("## opt.linemod_dir:", opt.linemod_dir)

debug = opt.debug

use_reconstructed_mesh = opt.use_reconstructed_mesh

debug_dir = opt.debug_dir

res = NestDict()

glctx = dr.RasterizeCudaContext()

mesh_tmp = trimesh.primitives.Box(extents=np.ones((3)), transform=np.eye(4)).to_mesh()

est = FoundationPose(model_pts=mesh_tmp.vertices.copy(), model_normals=mesh_tmp.vertex_normals.copy(), symmetry_tfs=None, mesh=mesh_tmp, scorer=None, refiner=None, glctx=glctx, debug_dir=debug_dir, debug=debug)

# ob_id

match = re.search(r'\d+$', opt.linemod_dir)

if match:

last_number = match.group()

ob_id = int(last_number)

else:

print("No digits found at the end of the string")

# for ob_id in reader_tmp.ob_ids:

if ob_id:

if use_reconstructed_mesh:

print("## ob_id:", ob_id)

print("## opt.linemod_dir:", opt.linemod_dir)

print("## opt.ref_view_dir:", opt.ref_view_dir)

mesh = reader_tmp.get_reconstructed_mesh(ref_view_dir=opt.ref_view_dir)

else:

mesh = reader_tmp.get_gt_mesh(ob_id)

# symmetry_tfs = reader_tmp.symmetry_tfs[ob_id] # !!!!!!!!!!!!!!!!

args = []

reader = LinemodReader(opt.linemod_dir, split=None)

video_id = reader.get_video_id()

# est.reset_object(model_pts=mesh.vertices.copy(), model_normals=mesh.vertex_normals.copy(), symmetry_tfs=symmetry_tfs, mesh=mesh) # raw

est.reset_object(model_pts=mesh.vertices.copy(), model_normals=mesh.vertex_normals.copy(), mesh=mesh) # !!!!!!!!!!!!!!!!

print("### len(reader.color_files):", len(reader.color_files))

for i in range(len(reader.color_files)):

args.append((reader, [i], est, debug, ob_id, "cuda:0"))

# vis Data

to_origin, extents = trimesh.bounds.oriented_bounds(mesh)

bbox = np.stack([-extents/2, extents/2], axis=0).reshape(2,3)

os.makedirs(f'{opt.linemod_dir}/track_vis', exist_ok=True)

outs = []

i = 0

for arg in args[:200]:

print("### num:", i)

out, pose = run_pose_estimation_worker(*arg)

outs.append(out)

center_pose = pose@np.linalg.inv(to_origin)

img_color = reader.get_color(i)

vis = draw_posed_3d_box(reader.K, img=img_color, ob_in_cam=center_pose, bbox=bbox)

vis = draw_xyz_axis(img_color, ob_in_cam=center_pose, scale=0.1, K=reader.K, thickness=3, transparency=0, is_input_rgb=True)

imageio.imwrite(f'{opt.linemod_dir}/track_vis/{reader.id_strs[i]}.png', vis)

i = i + 1

for out in outs:

for video_id in out:

for id_str in out[video_id]:

for ob_id in out[video_id][id_str]:

res[video_id][id_str][ob_id] = out[video_id][id_str][ob_id]

with open(f'{opt.debug_dir}/linemod_res.yml','w') as ff:

yaml.safe_dump(make_yaml_dumpable(res), ff)

print("Save linemod_res.yml OK !!!")

if __name__=='__main__':

parser = argparse.ArgumentParser()

code_dir = os.path.dirname(os.path.realpath(__file__))

parser.add_argument('--linemod_dir', type=str, default="/guopu/FoundationPose-main/model_free_ref_views/lm_test_all/000015", help="linemod root dir") # lm_test_all lm_test

parser.add_argument('--use_reconstructed_mesh', type=int, default=1)

parser.add_argument('--ref_view_dir', type=str, default="/guopu/FoundationPose-main/model_free_ref_views/lm_ref_views/ob_0000015")

parser.add_argument('--debug', type=int, default=0)

parser.add_argument('--debug_dir', type=str, default=f'/guopu/FoundationPose-main/model_free_ref_views/lm_test_all/debug') # lm_test_all lm_test

opt = parser.parse_args()

set_seed(0)

detect_type = 'mask' # mask / box / detected

run_pose_estimation()

datareader.py

# Copyright (c) 2023, NVIDIA CORPORATION. All rights reserved.

#

# NVIDIA CORPORATION and its licensors retain all intellectual property

# and proprietary rights in and to this software, related documentation

# and any modifications thereto. Any use, reproduction, disclosure or

# distribution of this software and related documentation without an express

# license agreement from NVIDIA CORPORATION is strictly prohibited.

from Utils import *

import json,os,sys

BOP_LIST = ['lmo','tless','ycbv','hb','tudl','icbin','itodd']

BOP_DIR = os.getenv('BOP_DIR')

def get_bop_reader(video_dir, zfar=np.inf):

if 'ycbv' in video_dir or 'YCB' in video_dir:

return YcbVideoReader(video_dir, zfar=zfar)

if 'lmo' in video_dir or 'LINEMOD-O' in video_dir:

return LinemodOcclusionReader(video_dir, zfar=zfar)

if 'tless' in video_dir or 'TLESS' in video_dir:

return TlessReader(video_dir, zfar=zfar)

if 'hb' in video_dir:

return HomebrewedReader(video_dir, zfar=zfar)

if 'tudl' in video_dir:

return TudlReader(video_dir, zfar=zfar)

if 'icbin' in video_dir:

return IcbinReader(video_dir, zfar=zfar)

if 'itodd' in video_dir:

return ItoddReader(video_dir, zfar=zfar)

else:

raise RuntimeError

def get_bop_video_dirs(dataset):

if dataset=='ycbv':

video_dirs = sorted(glob.glob(f'{BOP_DIR}/ycbv/test/*'))

elif dataset=='lmo':

video_dirs = sorted(glob.glob(f'{BOP_DIR}/lmo/lmo_test_bop19/test/*'))

elif dataset=='tless':

video_dirs = sorted(glob.glob(f'{BOP_DIR}/tless/tless_test_primesense_bop19/test_primesense/*'))

elif dataset=='hb':

video_dirs = sorted(glob.glob(f'{BOP_DIR}/hb/hb_test_primesense_bop19/test_primesense/*'))

elif dataset=='tudl':

video_dirs = sorted(glob.glob(f'{BOP_DIR}/tudl/tudl_test_bop19/test/*'))

elif dataset=='icbin':

video_dirs = sorted(glob.glob(f'{BOP_DIR}/icbin/icbin_test_bop19/test/*'))

elif dataset=='itodd':

video_dirs = sorted(glob.glob(f'{BOP_DIR}/itodd/itodd_test_bop19/test/*'))

else:

raise RuntimeError

return video_dirs

class YcbineoatReader:

def __init__(self,video_dir, downscale=1, shorter_side=None, zfar=np.inf):

self.video_dir = video_dir

self.downscale = downscale

self.zfar = zfar

self.color_files = sorted(glob.glob(f"{self.video_dir}/rgb/*.png"))

self.K = np.loadtxt(f'{video_dir}/cam_K.txt').reshape(3,3)

self.id_strs = []

for color_file in self.color_files:

id_str = os.path.basename(color_file).replace('.png','')

self.id_strs.append(id_str)

self.H,self.W = cv2.imread(self.color_files[0]).shape[:2]

if shorter_side is not None:

self.downscale = shorter_side/min(self.H, self.W)

self.H = int(self.H*self.downscale)

self.W = int(self.W*self.downscale)

self.K[:2] *= self.downscale

self.gt_pose_files = sorted(glob.glob(f'{self.video_dir}/annotated_poses/*'))

self.videoname_to_object = {

'bleach0': "021_bleach_cleanser",

'bleach_hard_00_03_chaitanya': "021_bleach_cleanser",

'cracker_box_reorient': '003_cracker_box',

'cracker_box_yalehand0': '003_cracker_box',

'mustard0': '006_mustard_bottle',

'mustard_easy_00_02': '006_mustard_bottle',

'sugar_box1': '004_sugar_box',

'sugar_box_yalehand0': '004_sugar_box',

'tomato_soup_can_yalehand0': '005_tomato_soup_can',

}

def get_video_name(self):

return self.video_dir.split('/')[-1]

def __len__(self):

return len(self.color_files)

def get_gt_pose(self,i):

try:

pose = np.loadtxt(self.gt_pose_files[i]).reshape(4,4)

return pose

except:

logging.info("GT pose not found, return None")

return None

def get_color(self,i):

color = imageio.imread(self.color_files[i])[...,:3]

color = cv2.resize(color, (self.W,self.H), interpolation=cv2.INTER_NEAREST)

return color

def get_mask(self,i):

mask = cv2.imread(self.color_files[i].replace('rgb','masks'),-1)

if len(mask.shape)==3:

for c in range(3):

if mask[...,c].sum()>0:

mask = mask[...,c]

break

mask = cv2.resize(mask, (self.W,self.H), interpolation=cv2.INTER_NEAREST).astype(bool).astype(np.uint8)

return mask

def get_depth(self,i):

depth = cv2.imread(self.color_files[i].replace('rgb','depth'),-1)/1e3

depth = cv2.resize(depth, (self.W,self.H), interpolation=cv2.INTER_NEAREST)

depth[(depth<0.1) | (depth>=self.zfar)] = 0

return depth

def get_xyz_map(self,i):

depth = self.get_depth(i)

xyz_map = depth2xyzmap(depth, self.K)

return xyz_map

def get_occ_mask(self,i):

hand_mask_file = self.color_files[i].replace('rgb','masks_hand')

occ_mask = np.zeros((self.H,self.W), dtype=bool)

if os.path.exists(hand_mask_file):

occ_mask = occ_mask | (cv2.imread(hand_mask_file,-1)>0)

right_hand_mask_file = self.color_files[i].replace('rgb','masks_hand_right')

if os.path.exists(right_hand_mask_file):

occ_mask = occ_mask | (cv2.imread(right_hand_mask_file,-1)>0)

occ_mask = cv2.resize(occ_mask, (self.W,self.H), interpolation=cv2.INTER_NEAREST)

return occ_mask.astype(np.uint8)

def get_gt_mesh(self):

ob_name = self.videoname_to_object[self.get_video_name()]

YCB_VIDEO_DIR = os.getenv('YCB_VIDEO_DIR')

mesh = trimesh.load(f'{YCB_VIDEO_DIR}/models/{ob_name}/textured_simple.obj')

return mesh

class BopBaseReader:

def __init__(self, base_dir, zfar=np.inf, resize=1):

self.base_dir = base_dir

self.resize = resize

self.dataset_name = None

self.color_files = sorted(glob.glob(f"{self.base_dir}/rgb/*"))

if len(self.color_files)==0:

self.color_files = sorted(glob.glob(f"{self.base_dir}/gray/*"))

self.zfar = zfar

self.K_table = {}

with open(f'{self.base_dir}/scene_camera.json','r') as ff:

info = json.load(ff)

for k in info:

self.K_table[f'{int(k):06d}'] = np.array(info[k]['cam_K']).reshape(3,3)

self.bop_depth_scale = info[k]['depth_scale']

if os.path.exists(f'{self.base_dir}/scene_gt.json'):

with open(f'{self.base_dir}/scene_gt.json','r') as ff:

self.scene_gt = json.load(ff)

self.scene_gt = copy.deepcopy(self.scene_gt) # Release file handle to be pickle-able by joblib

assert len(self.scene_gt)==len(self.color_files)

else:

self.scene_gt = None

self.make_id_strs()

def make_scene_ob_ids_dict(self):

with open(f'{BOP_DIR}/{self.dataset_name}/test_targets_bop19.json','r') as ff:

self.scene_ob_ids_dict = {}

data = json.load(ff)

for d in data:

if d['scene_id']==self.get_video_id():

id_str = f"{d['im_id']:06d}"

if id_str not in self.scene_ob_ids_dict:

self.scene_ob_ids_dict[id_str] = []

self.scene_ob_ids_dict[id_str] += [d['obj_id']]*d['inst_count']

def get_K(self, i_frame):

K = self.K_table[self.id_strs[i_frame]]

if self.resize!=1:

K[:2,:2] *= self.resize

return K

def get_video_dir(self):

video_id = int(self.base_dir.rstrip('/').split('/')[-1])

return video_id

def make_id_strs(self):

self.id_strs = []

for i in range(len(self.color_files)):

name = os.path.basename(self.color_files[i]).split('.')[0]

self.id_strs.append(name)

def get_instance_ids_in_image(self, i_frame:int):

ob_ids = []

if self.scene_gt is not None:

name = int(os.path.basename(self.color_files[i_frame]).split('.')[0])

for k in self.scene_gt[str(name)]:

ob_ids.append(k['obj_id'])

elif self.scene_ob_ids_dict is not None:

return np.array(self.scene_ob_ids_dict[self.id_strs[i_frame]])

else:

mask_dir = os.path.dirname(self.color_files[0]).replace('rgb','mask_visib')

id_str = self.id_strs[i_frame]

mask_files = sorted(glob.glob(f'{mask_dir}/{id_str}_*.png'))

ob_ids = []

for mask_file in mask_files:

ob_id = int(os.path.basename(mask_file).split('.')[0].split('_')[1])

ob_ids.append(ob_id)

ob_ids = np.asarray(ob_ids)

return ob_ids

def get_gt_mesh_file(self, ob_id):

raise RuntimeError("You should override this")

def get_color(self,i):

color = imageio.imread(self.color_files[i])

if len(color.shape)==2:

color = np.tile(color[...,None], (1,1,3)) # Gray to RGB

if self.resize!=1:

color = cv2.resize(color, fx=self.resize, fy=self.resize, dsize=None)

return color

def get_depth(self,i, filled=False):

if filled:

depth_file = self.color_files[i].replace('rgb','depth_filled')

depth_file = f'{os.path.dirname(depth_file)}/0{os.path.basename(depth_file)}'

depth = cv2.imread(depth_file,-1)/1e3

else:

depth_file = self.color_files[i].replace('rgb','depth').replace('gray','depth')

depth = cv2.imread(depth_file,-1)*1e-3*self.bop_depth_scale

if self.resize!=1:

depth = cv2.resize(depth, fx=self.resize, fy=self.resize, dsize=None, interpolation=cv2.INTER_NEAREST)

depth[depth<0.1] = 0

depth[depth>self.zfar] = 0

return depth

def get_xyz_map(self,i):

depth = self.get_depth(i)

xyz_map = depth2xyzmap(depth, self.get_K(i))

return xyz_map

def get_mask(self, i_frame:int, ob_id:int, type='mask_visib'):

'''

@type: mask_visib (only visible part) / mask (projected mask from whole model)

'''

pos = 0

name = int(os.path.basename(self.color_files[i_frame]).split('.')[0])

if self.scene_gt is not None:

for k in self.scene_gt[str(name)]:

if k['obj_id']==ob_id:

break

pos += 1

mask_file = f'{self.base_dir}/{type}/{name:06d}_{pos:06d}.png'

if not os.path.exists(mask_file):

logging.info(f'{mask_file} not found')

return None

else:

# mask_dir = os.path.dirname(self.color_files[0]).replace('rgb',type)

# mask_file = f'{mask_dir}/{self.id_strs[i_frame]}_{ob_id:06d}.png'

raise RuntimeError

mask = cv2.imread(mask_file, -1)

if self.resize!=1:

mask = cv2.resize(mask, fx=self.resize, fy=self.resize, dsize=None, interpolation=cv2.INTER_NEAREST)

return mask>0

def get_gt_mesh(self, ob_id:int):

mesh_file = self.get_gt_mesh_file(ob_id)

mesh = trimesh.load(mesh_file)

mesh.vertices *= 1e-3

return mesh

def get_model_diameter(self, ob_id):

dir = os.path.dirname(self.get_gt_mesh_file(self.ob_ids[0]))

info_file = f'{dir}/models_info.json'

with open(info_file,'r') as ff:

info = json.load(ff)

return info[str(ob_id)]['diameter']/1e3

def get_gt_poses(self, i_frame, ob_id):

gt_poses = []

name = int(self.id_strs[i_frame])

for i_k, k in enumerate(self.scene_gt[str(name)]):

if k['obj_id']==ob_id:

cur = np.eye(4)

cur[:3,:3] = np.array(k['cam_R_m2c']).reshape(3,3)

cur[:3,3] = np.array(k['cam_t_m2c'])/1e3

gt_poses.append(cur)

return np.asarray(gt_poses).reshape(-1,4,4)

def get_gt_pose(self, i_frame:int, ob_id, mask=None, use_my_correction=False):

ob_in_cam = np.eye(4)

best_iou = -np.inf

best_gt_mask = None

name = int(self.id_strs[i_frame])

for i_k, k in enumerate(self.scene_gt[str(name)]):

if k['obj_id']==ob_id:

cur = np.eye(4)

cur[:3,:3] = np.array(k['cam_R_m2c']).reshape(3,3)

cur[:3,3] = np.array(k['cam_t_m2c'])/1e3

if mask is not None: # When multi-instance exists, use mask to determine which one

gt_mask = cv2.imread(f'{self.base_dir}/mask_visib/{self.id_strs[i_frame]}_{i_k:06d}.png', -1).astype(bool)

intersect = (gt_mask*mask).astype(bool)

union = (gt_mask+mask).astype(bool)

iou = float(intersect.sum())/union.sum()

if iou>best_iou:

best_iou = iou

best_gt_mask = gt_mask

ob_in_cam = cur

else:

ob_in_cam = cur

break

if use_my_correction:

if 'ycb' in self.base_dir.lower() and 'train_real' in self.color_files[i_frame]:

video_id = self.get_video_id()

if ob_id==1:

if video_id in [12,13,14,17,24]:

ob_in_cam = ob_in_cam@self.symmetry_tfs[ob_id][1]

return ob_in_cam

def load_symmetry_tfs(self):

dir = os.path.dirname(self.get_gt_mesh_file(self.ob_ids[0]))

info_file = f'{dir}/models_info.json'

with open(info_file,'r') as ff:

info = json.load(ff)

self.symmetry_tfs = {}

self.symmetry_info_table = {}

for ob_id in self.ob_ids:

self.symmetry_info_table[ob_id] = info[str(ob_id)]

self.symmetry_tfs[ob_id] = symmetry_tfs_from_info(info[str(ob_id)], rot_angle_discrete=5)

self.geometry_symmetry_info_table = copy.deepcopy(self.symmetry_info_table)

def get_video_id(self):

return int(self.base_dir.split('/')[-1])

class LinemodOcclusionReader(BopBaseReader):

def __init__(self,base_dir='/mnt/9a72c439-d0a7-45e8-8d20-d7a235d02763/DATASET/LINEMOD-O/lmo_test_all/test/000002', zfar=np.inf):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'lmo'

self.K = list(self.K_table.values())[0]

self.ob_ids = [1,5,6,8,9,10,11,12]

self.ob_id_to_names = {

1: 'ape',

2: 'benchvise',

3: 'bowl',

4: 'camera',

5: 'water_pour',

6: 'cat',

7: 'cup',

8: 'driller',

9: 'duck',

10: 'eggbox',

11: 'glue',

12: 'holepuncher',

13: 'iron',

14: 'lamp',

15: 'phone',

}

# self.load_symmetry_tfs()

def get_gt_mesh_file(self, ob_id):

mesh_dir = f'{BOP_DIR}/{self.dataset_name}/models/obj_{ob_id:06d}.ply'

return mesh_dir

class LinemodReader(LinemodOcclusionReader):

def __init__(self, base_dir='/mnt/9a72c439-d0a7-45e8-8d20-d7a235d02763/DATASET/LINEMOD/lm_test_all/test/000001', zfar=np.inf, split=None):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'lm'

if split is not None: # train/test

print("## split is not None")

with open(f'/mnt/9a72c439-d0a7-45e8-8d20-d7a235d02763/DATASET/LINEMOD/Linemod_preprocessed/data/{self.get_video_id():02d}/{split}.txt','r') as ff:

lines = ff.read().splitlines()

self.color_files = []

for line in lines:

id = int(line)

self.color_files.append(f'{self.base_dir}/rgb/{id:06d}.png')

self.make_id_strs()

self.ob_ids = np.setdiff1d(np.arange(1,16), np.array([7,3])).tolist() # Exclude bowl and mug

# self.load_symmetry_tfs()

def get_gt_mesh_file(self, ob_id):

root = self.base_dir

print(f'{root}/../')

print(f'{root}/lm_models')

print(f'{root}/lm_models/models/obj_{ob_id:06d}.ply')

while 1:

if os.path.exists(f'{root}/lm_models'):

mesh_dir = f'{root}/lm_models/models/obj_{ob_id:06d}.ply'

break

else:

root = os.path.abspath(f'{root}/../')

mesh_dir = f'{root}/lm_models/models/obj_{ob_id:06d}.ply'

break

return mesh_dir

def get_reconstructed_mesh(self, ref_view_dir):

mesh = trimesh.load(os.path.abspath(f'{ref_view_dir}/model/model.obj'))

return mesh

class YcbVideoReader(BopBaseReader):

def __init__(self, base_dir, zfar=np.inf):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'ycbv'

self.K = list(self.K_table.values())[0]

self.make_id_strs()

self.ob_ids = np.arange(1,22).astype(int).tolist()

YCB_VIDEO_DIR = os.getenv('YCB_VIDEO_DIR')

self.ob_id_to_names = {}

self.name_to_ob_id = {}

# names = sorted(os.listdir(f'{YCB_VIDEO_DIR}/models/'))

if os.path.exists(f'{YCB_VIDEO_DIR}/models/'):

names = sorted(os.listdir(f'{YCB_VIDEO_DIR}/models/'))

for i,ob_id in enumerate(self.ob_ids):

self.ob_id_to_names[ob_id] = names[i]

self.name_to_ob_id[names[i]] = ob_id

else:

names = []

if 0:

# if 'BOP' not in self.base_dir:

with open(f'{self.base_dir}/../../keyframe.txt','r') as ff:

self.keyframe_lines = ff.read().splitlines()

# self.load_symmetry_tfs()

'''for ob_id in self.ob_ids:

if ob_id in [1,4,6,18]: # Cylinder

self.geometry_symmetry_info_table[ob_id] = {

'symmetries_continuous': [

{'axis':[0,0,1], 'offset':[0,0,0]},

],

'symmetries_discrete': euler_matrix(0, np.pi, 0).reshape(1,4,4).tolist(),

}

elif ob_id in [13]:

self.geometry_symmetry_info_table[ob_id] = {

'symmetries_continuous': [

{'axis':[0,0,1], 'offset':[0,0,0]},

],

}

elif ob_id in [2,3,9,21]: # Rectangle box

tfs = []

for rz in [0, np.pi]:

for rx in [0,np.pi]:

for ry in [0,np.pi]:

tfs.append(euler_matrix(rx, ry, rz))

self.geometry_symmetry_info_table[ob_id] = {

'symmetries_discrete': np.asarray(tfs).reshape(-1,4,4).tolist(),

}

else:

pass'''

def get_gt_mesh_file(self, ob_id):

if 'BOP' in self.base_dir:

mesh_file = os.path.abspath(f'{self.base_dir}/../../ycbv_models/models/obj_{ob_id:06d}.ply')

else:

mesh_file = f'{self.base_dir}/../../ycbv_models/models/obj_{ob_id:06d}.ply'

return mesh_file

def get_gt_mesh(self, ob_id:int, get_posecnn_version=False):

if get_posecnn_version:

YCB_VIDEO_DIR = os.getenv('YCB_VIDEO_DIR')

mesh = trimesh.load(f'{YCB_VIDEO_DIR}/models/{self.ob_id_to_names[ob_id]}/textured_simple.obj')

return mesh

mesh_file = self.get_gt_mesh_file(ob_id)

mesh = trimesh.load(mesh_file, process=False)

mesh.vertices *= 1e-3

tex_file = mesh_file.replace('.ply','.png')

if os.path.exists(tex_file):

from PIL import Image

im = Image.open(tex_file)

uv = mesh.visual.uv

material = trimesh.visual.texture.SimpleMaterial(image=im)

color_visuals = trimesh.visual.TextureVisuals(uv=uv, image=im, material=material)

mesh.visual = color_visuals

return mesh

def get_reconstructed_mesh(self, ob_id, ref_view_dir):

mesh = trimesh.load(os.path.abspath(f'{ref_view_dir}/ob_{ob_id:07d}/model/model.obj'))

return mesh

def get_transform_reconstructed_to_gt_model(self, ob_id):

out = np.eye(4)

return out

def get_visible_cloud(self, ob_id):

file = os.path.abspath(f'{self.base_dir}/../../models/{self.ob_id_to_names[ob_id]}/visible_cloud.ply')

pcd = o3d.io.read_point_cloud(file)

return pcd

def is_keyframe(self, i):

color_file = self.color_files[i]

video_id = self.get_video_id()

frame_id = int(os.path.basename(color_file).split('.')[0])

key = f'{video_id:04d}/{frame_id:06d}'

return (key in self.keyframe_lines)

class TlessReader(BopBaseReader):

def __init__(self, base_dir, zfar=np.inf):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'tless'

self.ob_ids = np.arange(1,31).astype(int).tolist()

self.load_symmetry_tfs()

def get_gt_mesh_file(self, ob_id):

mesh_file = f'{self.base_dir}/../../../models_cad/obj_{ob_id:06d}.ply'

return mesh_file

def get_gt_mesh(self, ob_id):

mesh = trimesh.load(self.get_gt_mesh_file(ob_id))

mesh.vertices *= 1e-3

mesh = trimesh_add_pure_colored_texture(mesh, color=np.ones((3))*200)

return mesh

class HomebrewedReader(BopBaseReader):

def __init__(self, base_dir, zfar=np.inf):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'hb'

self.ob_ids = np.arange(1,34).astype(int).tolist()

self.load_symmetry_tfs()

self.make_scene_ob_ids_dict()

def get_gt_mesh_file(self, ob_id):

mesh_file = f'{self.base_dir}/../../../hb_models/models/obj_{ob_id:06d}.ply'

return mesh_file

def get_gt_pose(self, i_frame:int, ob_id, use_my_correction=False):

logging.info("WARN HomeBrewed doesn't have GT pose")

return np.eye(4)

class ItoddReader(BopBaseReader):

def __init__(self, base_dir, zfar=np.inf):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'itodd'

self.make_id_strs()

self.ob_ids = np.arange(1,29).astype(int).tolist()

self.load_symmetry_tfs()

self.make_scene_ob_ids_dict()

def get_gt_mesh_file(self, ob_id):

mesh_file = f'{self.base_dir}/../../../itodd_models/models/obj_{ob_id:06d}.ply'

return mesh_file

class IcbinReader(BopBaseReader):

def __init__(self, base_dir, zfar=np.inf):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'icbin'

self.ob_ids = np.arange(1,3).astype(int).tolist()

self.load_symmetry_tfs()

def get_gt_mesh_file(self, ob_id):

mesh_file = f'{self.base_dir}/../../../icbin_models/models/obj_{ob_id:06d}.ply'

return mesh_file

class TudlReader(BopBaseReader):

def __init__(self, base_dir, zfar=np.inf):

super().__init__(base_dir, zfar=zfar)

self.dataset_name = 'tudl'

self.ob_ids = np.arange(1,4).astype(int).tolist()

self.load_symmetry_tfs()

def get_gt_mesh_file(self, ob_id):

mesh_file = f'{self.base_dir}/../../../tudl_models/models/obj_{ob_id:06d}.ply'

return mesh_file

运行run_linemod.py:

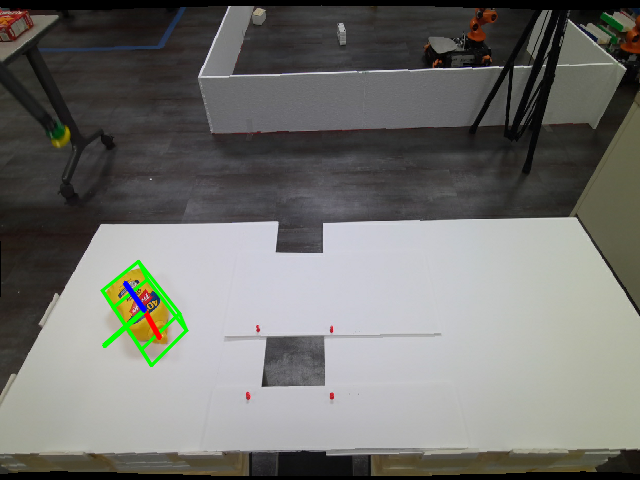

python run_linemod.py能看到文件夹model_free_ref_views/lm_test_all/000015/track_vis/

里面存放可视化结果:

分享完成~

本文先介绍到这里,后面会分享“6D位姿估计”的其它数据集、算法、代码、具体应用示例。